Vassilis Athitsos

VLM Lab

3D Hand Pose Estimation

The 3D pose of a hand is defined by the joint angles and the orientation of the hand. Different configurations of joint angles lead to different hand shapes. The same hand shape can look very different depending on its 3D hand orientation. The joint angles in a hand can be specified using 20 parameters. Three additional parameters are needed for the 3D orientation of the hand. Therefore, 3D hand pose is specified using a total of 23 parameters.
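As a concrete illustration of the parameter count, the sketch below bundles the 20 joint-angle parameters and the 3 orientation parameters into a single 23-dimensional vector. The class name `HandPose`, the use of radians, and the use of three Euler-style angles for orientation are assumptions made for illustration; the text above fixes only the counts.

```python
from dataclasses import dataclass
from typing import List

NUM_JOINT_ANGLES = 20  # hand-shape parameters, per the text
NUM_ORIENTATION = 3    # 3D orientation parameters, per the text


@dataclass
class HandPose:
    """Hypothetical container for one 3D hand pose."""
    joint_angles: List[float]  # 20 values (radians assumed)
    orientation: List[float]   # 3 values (Euler-style angles assumed)

    def __post_init__(self) -> None:
        # Reject poses that do not have the expected number of parameters.
        if len(self.joint_angles) != NUM_JOINT_ANGLES:
            raise ValueError(f"expected {NUM_JOINT_ANGLES} joint angles")
        if len(self.orientation) != NUM_ORIENTATION:
            raise ValueError(f"expected {NUM_ORIENTATION} orientation values")

    def as_vector(self) -> List[float]:
        """Concatenate shape and orientation into one 23-dimensional vector."""
        return list(self.joint_angles) + list(self.orientation)


pose = HandPose(joint_angles=[0.0] * 20, orientation=[0.0, 0.5, 1.0])
print(len(pose.as_vector()))  # 23
```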


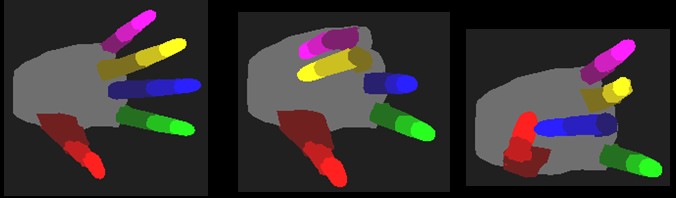

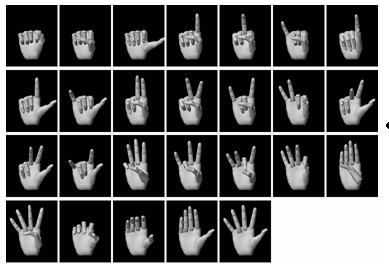

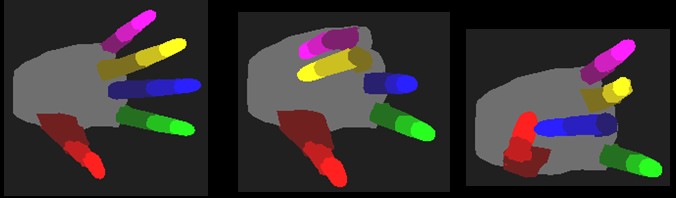

Figure 1: Three different hand shapes. Each hand shape corresponds to a different configuration of joint angles.


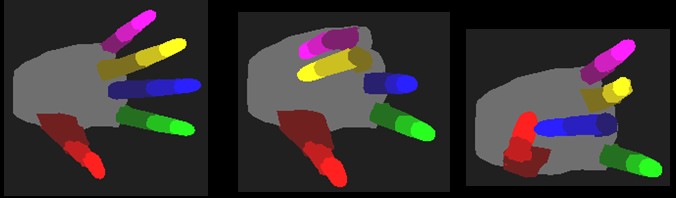

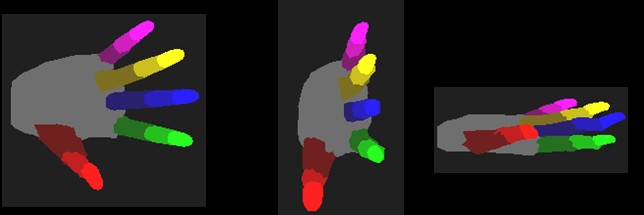

Figure 2: Three different views of a single hand shape. Each view corresponds to a different 3D hand orientation.


In this project, 3D hand pose estimation is formulated as an image database indexing problem. A large database of synthetic hand images is created using computer graphics; it contains images of various hand shapes under various 3D orientations. For each synthetic image, the system knows the hand shape and 3D orientation that were used to create it. To estimate the 3D hand pose in an input image, the system retrieves the most similar images in the database, and the hand pose parameters associated with those images are used as estimates of the hand pose in the input image.
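A minimal sketch of pose estimation as database retrieval, assuming a database of (image, pose) pairs and some generic `distance` function between images. The function name `estimate_pose` is hypothetical, and the brute-force scan over the whole database stands in for whatever search the actual system uses.

```python
import heapq
from typing import Callable, List, Sequence, Tuple, TypeVar

Image = TypeVar("Image")  # any image representation
Pose = TypeVar("Pose")    # any pose label, e.g. (shape_id, orientation_id)


def estimate_pose(
    query: Image,
    database: Sequence[Tuple[Image, Pose]],
    distance: Callable[[Image, Image], float],
    k: int = 1,
) -> List[Pose]:
    """Return the poses of the k database images closest to `query`.

    Every database image is compared to the query; the index i is kept as a
    tie-breaker so that images themselves are never compared.
    """
    scored = ((distance(query, img), i) for i, (img, _) in enumerate(database))
    best = heapq.nsmallest(k, scored)
    return [database[i][1] for _, i in best]


# Toy usage: "images" are numbers and the distance is absolute difference.
db = [(0.0, "fist"), (1.0, "open"), (2.5, "point")]
print(estimate_pose(0.9, db, lambda a, b: abs(a - b), k=2))  # ['open', 'fist']
```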


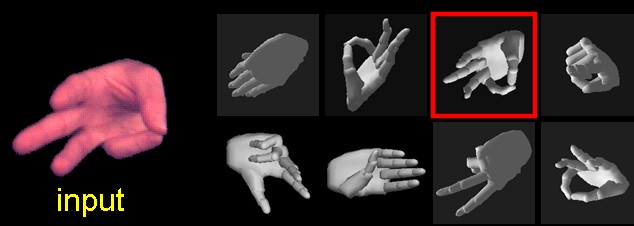

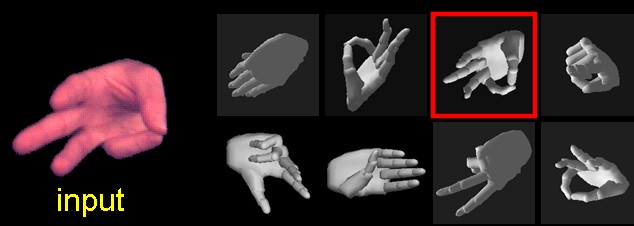

Figure 3: System input and output. Given the input image, the system searches the database of synthetic images to identify the ones most similar to the input image. Eight example database images are shown here; the most similar one is enclosed in a red square. The database currently used contains more than 100,000 images.


Main Challenges

Building a Large Database

To correctly estimate the hand pose in an image, the database must contain a synthetic image with a similar hand pose. A question that arises is: how many database images do we need to guarantee that, for every possible hand pose, there will be a similar database image? We do not have an answer to this question at this point. The answer partly depends on how we define when two images are "similar"; the more stringent our similarity criteria, the more database images we need.

In our current implementation, the database includes images of 26 hand shapes. Each hand shape is rendered from 4128 different 3D hand orientations, so the database contains 26 × 4128 = 107,328 images overall. Our sampling of 26 hand shapes is definitely inadequate to capture the entire range of possible hand shapes. On the other hand, our sampling of 3D hand orientations is an approximately uniform and dense sampling of the space of 3D orientations.
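The text does not say how the 4128 orientations are chosen. The sketch below shows one way to obtain an approximately uniform sampling, assuming (hypothetically) 86 viewing directions spread over the sphere with a Fibonacci lattice, each combined with 48 evenly spaced image-plane rotations, since 86 × 48 = 4128. The factorization and the lattice are illustrative choices, not a description of how the actual database was built.

```python
import math
from typing import List, Tuple

NUM_SHAPES = 26      # from the text
NUM_DIRECTIONS = 86  # assumed: camera viewing directions on the sphere
NUM_INPLANE = 48     # assumed: image-plane rotations per viewing direction

Vec3 = Tuple[float, float, float]


def fibonacci_sphere(n: int) -> List[Vec3]:
    """Return n approximately uniformly spread unit vectors (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n  # evenly spaced heights in (-1, 1)
        r = math.sqrt(1.0 - z * z)     # radius of the horizontal circle at height z
        phi = i * golden_angle         # advance by the golden angle each step
        points.append((r * math.cos(phi), r * math.sin(phi), z))
    return points


def sample_orientations(n_dirs: int, n_inplane: int) -> List[Tuple[Vec3, float]]:
    """Pair each viewing direction with evenly spaced in-plane rotation angles."""
    dirs = fibonacci_sphere(n_dirs)
    angles = [2.0 * math.pi * j / n_inplane for j in range(n_inplane)]
    return [(d, a) for d in dirs for a in angles]


orientations = sample_orientations(NUM_DIRECTIONS, NUM_INPLANE)
print(len(orientations))               # 4128
print(NUM_SHAPES * len(orientations))  # 107328
```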


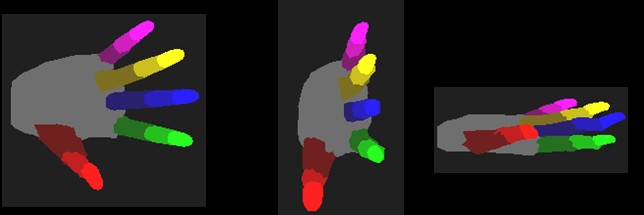

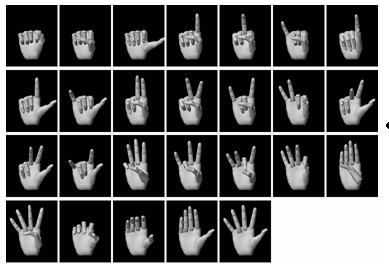

Figure 4: The 26 hand shapes used to generate the more than 100,000 database images.


Recognizing a fixed number of hand shapes is not as general as recognizing arbitrary 3D hand pose. However, it can be sufficient for many gesture recognition applications. For example, American Sign Language (ASL) uses fewer than 100 basic hand shapes, which means that our framework is applicable to recognizing hand pose in the ASL context.

Defining Accurate Similarity Measures

Given an input image, the system must retrieve the most similar database images. Therefore, we need to address the question: how do we define similarity? We need similarity measures that return high similarity values for images of similar hand poses and low similarity values for images of different hand poses.

We have experimented with several different similarity measures: the chamfer distance, geometric moments, edge orientation histograms, finger matching, and line matching. Our experiments show that the chamfer distance is the most accurate similarity measure, but also the most computationally expensive. Current work focuses on developing similarity measures that can improve the accuracy and efficiency of the system. We are particularly interested in similarity measures that are robust to noise, clutter, and segmentation errors. Our line matching method, described in our CVPR 2003 publication, is a first result in that direction.
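The chamfer distance compares two edge images as sets of edge-pixel coordinates. The text does not give a formula, so the sketch below assumes one common symmetric form: for edge-point sets A and B, the mean distance from each point of A to its nearest point in B, plus the same quantity from B to A. This brute-force version needs |A|·|B| point-to-point distance evaluations per comparison, one reason the measure is expensive; practical implementations typically precompute a distance transform of each edge image instead.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) coordinates of one edge pixel


def directed_chamfer(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Mean distance from each point in `a` to its nearest point in `b`."""
    return sum(min(math.dist(p, q) for q in b) for p in a) / len(a)


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric chamfer distance: sum of the two directed distances."""
    return directed_chamfer(a, b) + directed_chamfer(b, a)


edges_1 = [(0.0, 0.0), (1.0, 0.0)]
edges_2 = [(0.0, 1.0)]
print(chamfer_distance(edges_1, edges_2))  # (1 + sqrt(2)) / 2 + 1, about 2.207
```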

Achieving Efficient Retrieval

For every input image, the system has to evaluate its similarity to all database images. Given the size of the database, that task can be very time-consuming. On the other hand, for the system to be useful in human-computer interaction applications, retrieval must run at interactive speeds.

We have found that retrieval efficiency is greatly improved if we use multi-step retrieval: first use computationally cheap similarity measures (like finger matching and geometric moments) to reject the bulk of database images, and then use more accurate similarity measures (like the chamfer distance) to evaluate the remaining database images. Retrieval accuracy, meanwhile, remains very close to the accuracy attained by applying all similarity measures to all images.
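The multi-step idea can be sketched as a two-stage filter-and-refine search. The names `filter_and_refine`, `cheap`, `expensive`, and `keep` are hypothetical: `cheap` stands in for a fast measure such as finger matching or geometric moments, `expensive` for an accurate one such as the chamfer distance, and `keep` is the number of candidates that survive the first step.

```python
import heapq
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def filter_and_refine(
    query: T,
    database: Sequence[T],
    cheap: Callable[[T, T], float],
    expensive: Callable[[T, T], float],
    keep: int,
    k: int = 1,
) -> List[int]:
    """Two-step retrieval returning indices of the k best database items.

    Step 1 (filter): rank every item with the cheap distance and keep `keep`.
    Step 2 (refine): re-rank only the survivors with the expensive distance.
    """
    candidates = heapq.nsmallest(
        keep, range(len(database)), key=lambda i: cheap(query, database[i])
    )
    return heapq.nsmallest(
        k, candidates, key=lambda i: expensive(query, database[i])
    )


# Toy usage: a coarse (bucketed) distance filters, the exact one refines.
data = [float(i) for i in range(100)]
print(filter_and_refine(42.3, data, lambda a, b: abs(a - b) // 10,
                        lambda a, b: abs(a - b), keep=20, k=3))  # [42, 43, 41]
```

Only `keep` expensive evaluations are made per query instead of one per database image. If the cheap measure wrongly rejects the true best match in the first step, the second step cannot recover it, so `keep` trades speed against accuracy.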

Another direction that we have explored for retrieval efficiency is deriving efficient approximations of computationally expensive similarity measures, like the chamfer distance. In our Gesture Workshop 2003 and CVPR 2003 publications, we define approximations of the chamfer distance using a technique called Lipschitz embeddings. These approximations are ideal for the first retrieval steps, which identify the most likely matches. The exact measures can then be applied only to the selected matches, which improves overall efficiency. Our CVPR 2004 paper introduces the BoostMap method, a general method for constructing embeddings. Applied to hand images, BoostMap greatly improves the approximation of the chamfer distance.
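A Lipschitz embedding maps each object x to a vector whose i-th coordinate is the distance from x to a reference set A_i, that is, D(x, A_i) = min over a in A_i of d(x, a). The sketch below assumes small hypothetical reference sets and compares embedded vectors with the L1 distance; the reference sets and vector distance used in the published methods may differ. Once the database vectors are precomputed, embedding a query costs one expensive distance per reference object, and each subsequent comparison is a cheap vector operation.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def lipschitz_embedding(
    x: T, reference_sets: Sequence[Sequence[T]], dist: Callable[[T, T], float]
) -> List[float]:
    """Map x to (D(x, A_1), ..., D(x, A_k)), where D(x, A) = min of dist(x, a)."""
    return [min(dist(x, a) for a in ref) for ref in reference_sets]


def l1(u: Sequence[float], v: Sequence[float]) -> float:
    """Cheap vector distance used to compare two embeddings."""
    return sum(abs(a - b) for a, b in zip(u, v))


def toy_dist(a: float, b: float) -> float:
    """Toy metric standing in for an expensive distance such as chamfer."""
    return abs(a - b)


refs = [[0.0], [10.0]]                         # hypothetical reference sets
fx = lipschitz_embedding(3.0, refs, toy_dist)  # [3.0, 7.0]
fy = lipschitz_embedding(5.0, refs, toy_dist)  # [5.0, 5.0]
print(l1(fx, fy))                              # 4.0
```

When d is a metric, each coordinate satisfies |D(x, A_i) - D(y, A_i)| <= d(x, y), so the largest coordinate difference between two embeddings never exceeds d(x, y). The chamfer distance is not guaranteed to satisfy the triangle inequality, so for hand images the embedding serves only as an approximation.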

References

The papers in the IEEE Workshop on Cues in Communication 2001 and the IEEE Conference on Automatic Face and Gesture Recognition 2002 describe the overall framework, the assumptions, and the goals of the project. The papers in CVPR 2003 and the Gesture Workshop 2003 focus on methods to improve efficiency and accuracy in the presence of clutter and segmentation errors. The paper in CVPR 2004 introduces a general embedding method that can be used to efficiently approximate the chamfer distance.

- Vassilis Athitsos and Stan Sclaroff. 3D Hand Pose Estimation by Finding Appearance-Based Matches in a Large Database of Training Views. IEEE Workshop on Cues in Communication, December 2001.


  Extended version: Technical Report BUCS-2001-021.


- Vassilis Athitsos and Stan Sclaroff. An Appearance-Based Framework for 3D Hand Shape Classification and Camera Viewpoint Estimation. IEEE Conference on Automatic Face and Gesture Recognition, pages 45-52, May 2002.


  Extended version: Technical Report BUCS-2001-022.


- Vassilis Athitsos and Stan Sclaroff. Database Indexing Methods for 3D Hand Pose Estimation. Gesture Workshop, pages 288-299, April 2003.


- Vassilis Athitsos and Stan Sclaroff. Estimating 3D Hand Pose from a Cluttered Image. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 432-439, June 2003.


- Vassilis Athitsos, Jonathan Alon, Stan Sclaroff, and George Kollios. BoostMap: A Method for Efficient Approximate Similarity Rankings. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 268-275, June 2004.


- Vassilis Athitsos, Jonathan Alon, Stan Sclaroff, and George Kollios. BoostMap: An Embedding Method for Efficient Nearest Neighbor Retrieval. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 30(1), pages 89-104, January 2008.


- Michalis Potamias and Vassilis Athitsos. Nearest Neighbor Search Methods for Handshape Recognition. Conference on Pervasive Technologies Related to Assistive Environments (PETRA), July 2008.

